Seamless operations and system resilience are critical concerns in modern business IT, and failover cluster technology can play a major role in achieving these goals. This article explains what failover clusters are, how they work, and where they are used. Whether you’re a seasoned IT professional or a curious enthusiast, read on to understand how failover clusters work and to see scenarios where they prove indispensable.

What is a failover cluster?

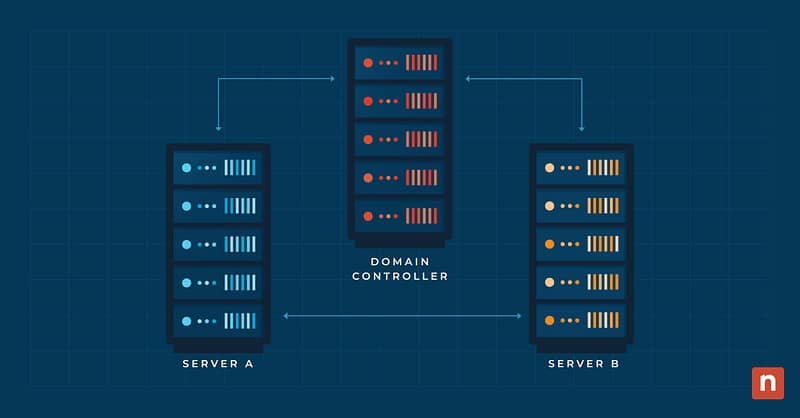

A failover cluster is a specialized form of high-availability infrastructure designed to keep services running when hardware or software fails. In essence, it is a group of interconnected computers working together as a single system to provide redundancy and continuous availability. The primary purpose of a failover cluster is to detect failures in one component of the system and automatically transfer the workload to a functioning component with little or no disruption to users.

The key components of a failover cluster typically include multiple servers (or nodes), shared storage resources, and cluster management software that monitors the health and status of the nodes. When a failure like a hardware malfunction is detected, the cluster management software orchestrates the failover process. This involves shifting the workload and responsibilities from the failing node to a healthy one, ensuring that the services or applications remain accessible and operational.

High-availability vs. continuous-availability failover clustering

High availability refers to the capability of a system or service to minimize downtime by quickly recovering from failures. High-availability failover clusters typically have the means to detect a failure, automatically initiate a failover process, and resume operations on a standby node. The goal is to reduce downtime to a minimum, ensuring that services are quickly restored without causing significant disruptions.

Continuous-availability failover clusters go a step further: they aim to maintain uninterrupted service with effectively zero downtime. Services transition seamlessly from a failed node to a healthy one, so end users experience no noticeable interruption. This typically requires synchronous replication of data to the standby node, so that the standby already holds every acknowledged write at the moment it takes over.
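To make the difference concrete, here is a minimal Python sketch of the two replication modes. The `ReplicatedPair` class is hypothetical and is not any product's API: a synchronous standby receives each write before it counts as done, while an asynchronous standby receives writes in periodic batches, so anything written after the last batch is lost if the primary fails.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    log: list[str] = field(default_factory=list)  # writes this node holds


class ReplicatedPair:
    """Hypothetical primary/standby pair, used only to contrast replication modes."""

    def __init__(self, synchronous: bool) -> None:
        self.synchronous = synchronous
        self.primary = Node("primary")
        self.standby = Node("standby")
        self.pending: list[str] = []  # acknowledged writes not yet on the standby

    def write(self, record: str) -> None:
        self.primary.log.append(record)
        if self.synchronous:
            # Synchronous: copy to the standby before the write counts as done.
            self.standby.log.append(record)
        else:
            # Asynchronous: acknowledge immediately and ship later.
            self.pending.append(record)

    def replicate(self) -> None:
        """One asynchronous replication cycle (a no-op in synchronous mode)."""
        self.standby.log.extend(self.pending)
        self.pending.clear()

    def fail_over(self) -> list[str]:
        """The primary is lost; only what reached the standby survives."""
        self.pending.clear()
        return list(self.standby.log)


for synchronous in (True, False):
    pair = ReplicatedPair(synchronous)
    pair.write("order-1")
    pair.replicate()
    pair.write("order-2")  # the primary fails before the next replication cycle
    label = "sync " if synchronous else "async"
    print(label, pair.fail_over())
# sync  ['order-1', 'order-2']
# async ['order-1']
```

The price of the synchronous mode is that every write has to wait for the standby's confirmation, which adds latency to each write.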

Safety and security through redundancy

Redundancy is a fundamental concept in failover clustering: it means including duplicate components or systems within a cluster to ensure reliability and fault tolerance. The primary goal of redundancy is to eliminate single points of failure, meaning components whose failure alone could cause a service interruption or data loss. In a failover cluster, redundancy is what keeps operations running even when one or more components fail.

In a failover cluster, redundancy is implemented in various ways:

- Node redundancy: Failover clusters consist of multiple nodes, or individual servers, which work together as a unified system. If one node fails due to hardware or software issues, the remaining nodes in the cluster take over its workload to prevent downtime. This node redundancy ensures that even if one server becomes unavailable, the services or applications can fail over to another functioning node.

- Shared storage redundancy: Clusters often use shared storage resources, such as Storage Area Networks (SANs), to maintain consistent data across all nodes. Redundancy in shared storage is achieved with technologies like RAID (Redundant Array of Independent Disks), which protect against disk failures, so the data remains accessible even if a storage device fails. To explore these concepts in more detail, including practical guidance on how SANs are implemented and why they’re beneficial, see "What is a Storage Area Network (SAN)? Benefits & Implementation."

- Network redundancy: Communication is vital among cluster nodes for coordination and data synchronization. Network redundancy involves having multiple network paths and interfaces to ensure that the cluster can continue communicating through alternative paths if one network connection fails. This helps prevent the isolation of nodes and ensures continuous availability.

- Power and hardware redundancy: Redundant power supplies, cooling systems, and other hardware components contribute to the overall reliability of the failover cluster. These measures protect against failures caused by power outages or hardware malfunctions.
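The benefit of redundancy can be quantified with standard reliability arithmetic: components in parallel (redundant copies) fail only if all of them fail, while components in series fail if any one of them fails. The Python sketch below uses made-up availability figures and assumes failures are independent; in practice, failures are often correlated (shared power, a common firmware bug), so these numbers are optimistic.

```python
from math import prod


def parallel(*availabilities: float) -> float:
    """Redundant components: the group is down only if every member is down."""
    return 1 - prod(1 - a for a in availabilities)


def series(*availabilities: float) -> float:
    """Chained components: all must be up, so each one is a potential single point of failure."""
    return prod(availabilities)


# Hypothetical availability figures, assuming failures are independent.
NODE = 0.99
STORAGE = 0.999

two_nodes = parallel(NODE, NODE)
single_storage = series(two_nodes, STORAGE)
mirrored_storage = series(two_nodes, parallel(STORAGE, STORAGE))

print(f"two nodes:                 {two_nodes:.4f}")         # 0.9999
print(f"+ one storage array:       {single_storage:.4f}")    # 0.9989
print(f"+ mirrored storage arrays: {mirrored_storage:.4f}")  # 0.9999
```

The middle line shows why single points of failure matter: two redundant nodes gain little if they both depend on one non-redundant storage array.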

The importance of failover clustering

Failover clustering is paramount in modern IT infrastructure because it delivers high availability and keeps data and services continuously accessible. Its significance breaks down into the following areas:

- Availability:

High availability is crucial for systems and applications that demand constant accessibility. By distributing workloads across multiple nodes, failover clusters ensure that if one node encounters a failure, the services or applications seamlessly switch to a healthy node. Whether in business-critical applications, databases, or web services, maintaining availability is essential for meeting user expectations and ensuring uninterrupted operations.

- Downtime mitigation:

Downtime can have severe consequences for businesses, leading to lost productivity, revenue, and customer satisfaction. Failover clustering mitigates downtime by responding swiftly to hardware or software failures. When a node fails, the automatic failover mechanism shifts its workload to another functioning node, minimizing the time that services are unavailable.

- Data and service continuity:

The continuity of data and services is a core focus of failover clustering. By incorporating redundant components and failover mechanisms, clusters allow organizations to maintain access to critical data and services. Shared storage redundancy keeps data consistent across nodes, while failover mechanisms for applications and services let users continue their tasks with little interruption, even when unexpected failures occur. This level of continuity is vital for preserving data integrity, meeting service level agreements (SLAs), and building a resilient IT infrastructure that can withstand various challenges.

- Resource optimization:

Failover clusters enable efficient use of resources by dynamically allocating workloads based on the health and capacity of each node. This optimization helps organizations make the most of their hardware investments, ensuring that computing resources are allocated where they are most needed at any given time.
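A placement decision of this kind can be sketched as a simple scoring rule. In the Python example below, the `NodeStatus` fields and the "healthy, fits in memory, most idle CPU wins" rule are illustrative assumptions; real cluster products each apply their own, more elaborate placement logic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeStatus:
    name: str
    healthy: bool
    cpu_free: float        # fraction of CPU capacity currently unused (0.0-1.0)
    memory_free_gb: float


def choose_node(nodes: list[NodeStatus], memory_needed_gb: float) -> str | None:
    """Pick a healthy node that can fit the workload, preferring the most idle one."""
    candidates = [n for n in nodes if n.healthy and n.memory_free_gb >= memory_needed_gb]
    if not candidates:
        return None  # nowhere to place the workload
    best = max(candidates, key=lambda n: (n.cpu_free, n.memory_free_gb))
    return best.name


nodes = [
    NodeStatus("node-a", healthy=True, cpu_free=0.20, memory_free_gb=64),
    NodeStatus("node-b", healthy=False, cpu_free=0.90, memory_free_gb=128),
    NodeStatus("node-c", healthy=True, cpu_free=0.55, memory_free_gb=32),
]
print(choose_node(nodes, memory_needed_gb=16))  # node-c: healthy and most idle
print(choose_node(nodes, memory_needed_gb=48))  # node-a: only healthy node with enough memory
```

Note that node-b is never chosen despite having the most spare capacity, because it is unhealthy.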

- Simplified maintenance:

Failover clusters facilitate maintenance activities without causing disruptions to services. By allowing administrators to take nodes offline for maintenance or upgrades while the remaining nodes continue to handle the workload, clusters support a non-disruptive approach to system maintenance.

Types of failover clusters

Failover clusters are categorized into two main types: shared-nothing and shared-disk clusters, each tailored to specific requirements. The choice between these types of clusters depends on factors such as the specific use case, scalability needs, and the organizational willingness to manage complexity.

- Shared-nothing failover clusters

In a shared-nothing failover cluster, individual nodes operate independently, each with its own dedicated resources, including local storage. The nodes communicate over a common network, and while they may use shared storage for configuration or as a witness disk, application data is not shared directly. Shared-nothing clusters are often preferred for their simplicity and because they avoid a shared storage system as a single point of failure. However, because application data is not shared, it must be replicated or partitioned across nodes, which can increase network traffic and complicate failover.

- Shared-disk failover clusters

These failover clusters use a centralized storage system, typically a Storage Area Network (SAN) or Network Attached Storage (NAS), through which all nodes access the same application data. A shared-disk cluster scales by adding nodes for more processing power, while storage capacity can be expanded in the central storage system. However, shared-disk failover clusters tend to be more complex to set up and manage because of the shared storage configuration, and multiple nodes accessing the same storage can cause resource contention. The shared storage must itself be made redundant, since otherwise it becomes a single point of failure.

Hyper-V, Windows Server, VMware Failover Clusters

Several failover cluster types are associated with popular platforms such as Microsoft Hyper-V, Windows Server, and VMware. Let’s explore the different failover cluster types in the context of these platforms:

Hyper-V Failover Cluster Types

Hyper-V Host Clusters

In a Hyper-V host cluster, multiple physical servers (the aforementioned nodes) are clustered to collectively host and manage virtual machines (VMs). If one node fails, its VMs automatically fail over to another healthy node within the cluster, where they are restarted, keeping them highly available.

Advantages:

- High availability for virtualized workloads.

- Automatic restart of VMs on a healthy node in case of node failure.

Considerations:

- Requires shared storage for VM files.

Hyper-V Replica

Hyper-V Replica provides asynchronous replication of VMs from one Hyper-V host to another. In the event of a primary host failure, the replicated VM can be activated on the secondary host, minimizing downtime.

Advantages:

- Geographical separation for disaster recovery.

- Asynchronous replication for flexibility.

Considerations:

- Because replication is asynchronous, changes made since the last replication cycle can be lost in an unplanned failover.

Windows Server Failover Cluster (WSFC) Types

File Server Failover Clusters

Windows Server Failover Clusters (WSFCs) can be configured to provide high availability for file server services. Multiple nodes work together to ensure access to shared files and folders, with automatic failover in case of a node or service failure.

Advantages:

- Continuous file access for users.

- Automatic recovery from node failures.

Considerations:

- Requires shared storage for shared files.

SQL Server Failover Clusters

Failover clusters can be implemented to provide high availability for SQL Server databases. A SQL Server failover cluster instance is installed across multiple nodes but runs on one node at a time; if that node fails, the instance automatically fails over to another node.

Advantages:

- High availability of SQL Server databases.

- Automatic failover for minimal downtime.

Considerations:

- Requires shared storage for database files.

VMware Failover Cluster Types

vSphere High Availability (HA)

VMware vSphere HA provides high availability for virtual machines in a vSphere cluster. If a host fails, its VMs are automatically restarted on other hosts within the cluster.

Advantages:

- Automatic restart of VMs for continuous operation.

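Considerations:

- Requires shared storage (for example, a SAN, NFS, or vSAN datastore) that all hosts in the cluster can access.

- May involve brief VM downtime during failover, because VMs are restarted rather than kept running.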

Fault Tolerance (FT)

VMware FT provides continuous availability by maintaining a secondary copy of a VM on another host. If the primary host fails, the secondary VM takes over without interruption.

Advantages:

- Zero downtime during host failures.

- Continuous protection at the VM level.

Considerations:

- Requires shared storage and specific hardware support.

Hybrid Cluster Variations

These configurations combine elements from different technologies or platforms to achieve specific goals. Two examples of hybrid clusters are SQL Server Failover Clusters and Red Hat Linux Clusters. We’ll explore these below:

SQL Server Failover Clusters

An SQL Server Failover Cluster is a hybrid cluster configuration that combines Microsoft’s SQL Server with Windows Server Failover Clustering. In this setup, multiple nodes host SQL Server instances, and the failover cluster ensures high database availability.

Advantages:

- High availability of SQL Server databases.

- Automatic failover in case of node or service failures.

- Utilizes Windows Server Failover Clustering for reliability.

Considerations:

- Requires shared storage for database files.

- Windows Server Failover Clustering features are integral to the SQL Server failover capabilities.

Red Hat Linux Clusters

Red Hat Linux Clusters, often built with technologies like Pacemaker and Corosync, create high-availability configurations for applications and services on Linux-based systems. These clusters can be hybrid in the sense that they may integrate with storage solutions from different vendors, virtualization technologies, or cloud services.

Advantages:

- High availability for Linux-based applications and services.

- Flexibility to integrate with various storage and virtualization technologies.

- Suitable for on-premises or cloud-based deployments.

Considerations:

- Requires understanding and configuration of Linux clustering technologies.

- Hybrid aspects may include compatibility considerations with third-party solutions.

These hybrid cluster variations showcase the flexibility and adaptability of clustering technologies to meet specific needs across different platforms.

How Failover Clusters Work

Failover clusters work by establishing a group of interconnected servers, referred to as nodes, that collaborate to ensure high availability and continuous operation of services or applications. The primary goal is to detect and respond to hardware or software-related failures to minimize downtime and maintain service continuity. The mechanism involves the following key steps:

- Cluster formation

Multiple nodes are connected to a shared network, creating a cluster. Depending on the type of cluster, as described earlier under Types of failover clusters, it may also use shared storage or other shared resources.

- Health monitoring

Cluster management software continuously monitors the health and status of each node within the cluster. Monitoring includes checking for hardware failures, network connectivity, and the availability of critical services.

- Heartbeat communication

Nodes communicate with each other through a process called heartbeat communication. Heartbeats are signals exchanged between nodes at regular intervals to confirm the health and availability of each node. If a node stops sending heartbeats or is otherwise deemed unhealthy, the cluster management software flags it as having a potential issue.
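A minimal Python sketch of heartbeat-based failure detection, assuming a fixed heartbeat interval and a hypothetical rule that a node becomes suspect after a set number of missed heartbeats. Time is passed in explicitly so the run is repeatable; real cluster software implements detection with its own protocols and tunable thresholds.

```python
from __future__ import annotations


class HeartbeatMonitor:
    """Flags nodes whose heartbeats have stopped arriving (illustrative only)."""

    def __init__(self, nodes: list[str], interval: float = 1.0,
                 missed_threshold: int = 5) -> None:
        # Hypothetical tunables: a node is suspected once it has been silent for
        # more than interval * missed_threshold seconds.
        self.timeout = interval * missed_threshold
        # Treat every node as last seen at t=0 when monitoring starts.
        self.last_seen: dict[str, float] = {node: 0.0 for node in nodes}

    def record_heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def suspect_nodes(self, now: float) -> list[str]:
        return sorted(n for n, seen in self.last_seen.items() if now - seen > self.timeout)


# Simulated clock in seconds (a real monitor would read time.monotonic()).
monitor = HeartbeatMonitor(["node-a", "node-b", "node-c"])
for t in range(1, 10):
    # node-c stops sending heartbeats after t=2
    senders = ["node-a", "node-b", "node-c"] if t <= 2 else ["node-a", "node-b"]
    for node in senders:
        monitor.record_heartbeat(node, now=float(t))
    suspects = monitor.suspect_nodes(now=float(t))
    if suspects:
        print(f"t={t}s: suspected failure on {suspects}")  # t=8s: suspected failure on ['node-c']
        break
```

Requiring several missed heartbeats, rather than one, keeps a single delayed packet from triggering an unnecessary failover, at the cost of slower detection.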

- Resource ownership and failover policies

Each node in the cluster is capable of hosting and managing the services or applications. Failover policies and resource ownership rules dictate which node is responsible for specific services at any given time, and which healthy node should take over the workload if that node fails.
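One common way to express such a policy is an ordered list of nodes allowed to own each service group. The Python sketch below is loosely modeled on that idea; the function names, group names, and data shapes are hypothetical and do not reproduce any product's API.

```python
from __future__ import annotations


def pick_owner(preferred_owners: list[str], healthy_nodes: set[str]) -> str | None:
    """Return the first healthy node in the group's preference order, if any."""
    for node in preferred_owners:
        if node in healthy_nodes:
            return node
    return None


def fail_over(ownership: dict[str, str], policies: dict[str, list[str]],
              failed_node: str, healthy_nodes: set[str]) -> dict[str, str]:
    """Reassign every group owned by failed_node according to its policy."""
    survivors = healthy_nodes - {failed_node}
    new_ownership = dict(ownership)
    for group, owner in ownership.items():
        if owner == failed_node:
            target = pick_owner(policies[group], survivors)
            if target is None:
                raise RuntimeError(f"no healthy node is allowed to host {group}")
            new_ownership[group] = target
    return new_ownership


# Hypothetical groups, each with an ordered list of nodes allowed to own it.
policies = {
    "sql-group": ["node-a", "node-b", "node-c"],
    "file-share": ["node-b", "node-c"],
}
ownership = {"sql-group": "node-a", "file-share": "node-b"}
healthy = {"node-b", "node-c"}  # node-a has just been flagged as failed

print(fail_over(ownership, policies, "node-a", healthy))
# {'sql-group': 'node-b', 'file-share': 'node-b'}
```

Groups on healthy nodes are left alone, and a node missing from a group's list can never take it over, which is how such a policy constrains where a service may run.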

- Automatic failover

When a failure is detected, the cluster management software triggers an automatic failover process, transitioning the services or applications from the failed node to a healthy node within the cluster. Users typically experience at most a brief interruption while services come back online on the new node.

- Shared storage (optional)

In some failover clusters, shared storage is used to store application data or configurations. Shared storage allows for consistent data access across nodes and facilitates seamless failover.

- Manual intervention (optional)

Administrators may need to intervene manually in certain scenarios, especially during planned maintenance activities or when dealing with specific issues. Manual interventions ensure that the failover process aligns with organizational requirements and policies.

- Recovery and redundancy

After the failover, the cluster continues to operate with the remaining healthy nodes. Once the failed node is restored or a replacement is brought online, the cluster can rebalance its resources or return to its original configuration.
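Returning services to their original nodes after recovery is often called failback. The Python sketch below assumes each group has a single hypothetical preferred node and only plans moves for groups whose preferred node is healthy again. The `allow_failback` flag is an illustrative assumption reflecting that automatic failback is commonly configurable, since each move briefly interrupts the service being moved.

```python
from __future__ import annotations


def plan_failback(ownership: dict[str, str], preferred: dict[str, str],
                  healthy_nodes: set[str],
                  allow_failback: bool = True) -> list[tuple[str, str, str]]:
    """Return (group, from_node, to_node) moves that send groups back to their preferred node."""
    if not allow_failback:
        return []  # leave groups where they are until an administrator moves them
    moves = []
    for group, current in ownership.items():
        home = preferred[group]
        if current != home and home in healthy_nodes:
            moves.append((group, current, home))
    return moves


# State after node-a failed and its group moved to node-b (hypothetical names).
ownership = {"sql-group": "node-b", "file-share": "node-b"}
preferred = {"sql-group": "node-a", "file-share": "node-b"}

print(plan_failback(ownership, preferred, healthy_nodes={"node-b", "node-c"}))
# [] (node-a is still down, so nothing moves)
print(plan_failback(ownership, preferred, healthy_nodes={"node-a", "node-b", "node-c"}))
# [('sql-group', 'node-b', 'node-a')]
```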

By following these steps, failover clusters provide a robust solution for maintaining high availability and minimizing downtime in critical computing environments.

Failover Clustering Applications

Failover clusters find applications across various industries, offering robust solutions for ensuring business continuity, disaster recovery, scalability, and load balancing. These real-world examples illustrate their versatility:

Finance: Business continuity and disaster recovery (BCDR)

Banks and financial institutions deploy failover clusters to ensure the continuous availability of critical services, such as online banking and transaction processing. If a server fails, failover mechanisms keep these services running, preventing financial disruptions for customers.

E-commerce platforms: Maximum uptime

Online retailers leverage failover clusters to ensure their e-commerce websites remain operational during high-traffic periods or in the face of server failures. This continuous availability is crucial for maintaining customer satisfaction and revenue.

Cloud providers: Scalability and load balancing

Cloud providers use failover clusters to scale their services dynamically. As demand increases, new nodes can be added to the cluster to distribute the workload efficiently. Load balancing mechanisms ensure optimal resource utilization, offering customers reliable and scalable cloud services.

Content delivery networks (CDNs): Speed and efficiency

CDNs employ failover clusters to ensure quick and reliable content delivery. Nodes worldwide can handle user requests, and the failover process redirects traffic seamlessly to healthy nodes, preventing service interruptions and optimizing content delivery.
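Both the load balancing described for cloud providers and the redirection of traffic to healthy nodes described here can be illustrated with a health-aware round-robin balancer. This Python sketch is a simplified stand-in, not how any particular cloud provider or CDN routes traffic: requests rotate across nodes, and any node marked unhealthy is skipped.

```python
from __future__ import annotations


class HealthAwareRoundRobin:
    """Spread requests across nodes in turn, skipping any node marked unhealthy."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self.healthy = set(nodes)
        self._next = 0

    def mark(self, node: str, healthy: bool) -> None:
        if healthy:
            self.healthy.add(node)
        else:
            self.healthy.discard(node)

    def pick(self) -> str:
        # Try each node at most once per call so a fully failed pool cannot loop forever.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy nodes available")


lb = HealthAwareRoundRobin(["node-a", "node-b", "node-c"])
print([lb.pick() for _ in range(3)])  # ['node-a', 'node-b', 'node-c']
lb.mark("node-b", healthy=False)      # a health check fails on node-b
print([lb.pick() for _ in range(4)])  # ['node-a', 'node-c', 'node-a', 'node-c']
```

Once node-b passes its health checks again, calling `lb.mark("node-b", healthy=True)` returns it to the rotation.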

Telecommunications networks: Reliable connectivity

Telecom companies deploy failover clusters in their networks to handle voice and data services. In case of network congestion or hardware failures, failover ensures that communication services are rerouted to alternative nodes, maintaining connectivity for users.

Manufacturing: Disaster recovery planning

Manufacturing facilities use failover clusters to ensure the continuity of production and supply chain systems. If a server fails, failover keeps manufacturing processes and order fulfillment running, minimizing disruptions.

Government and public services: Continuity of government

Government agencies deploy failover clusters for vital public services, such as tax processing or emergency response systems. The failover capability ensures that essential government functions continue, even during unexpected events or disasters.

The impact of failover clustering

As you’ve seen, failover clustering is pivotal in ensuring the uninterrupted operation of critical IT systems across diverse industries. This robust technology, exemplified in real-world applications, is essential for business continuity, disaster recovery, scalability, and load balancing.

The importance of failover clustering in IT lies in its ability to maintain high availability, optimize resource utilization, and contribute significantly to the resilience and efficiency of organizations in an ever-evolving technological landscape.

If you’re looking to build a robust IT infrastructure, we encourage you to explore failover clustering further.